FTC Fraudulent Marketing Analysis

Please note: I worked on this project for two months as a Research Assistant for Anita Rao, a professor of empirical marketing at the University of Chicago Booth School of Business. Unfortunately, between my class schedule, a part-time internship, and extracurricular commitments, I was unable to finish the project.

Here is a link to the GitHub repo containing the code I used to parse user web-browsing data.

— Rao, Wang | Demand for ‘Healthy’ Products: False Claims and FTC Regulation

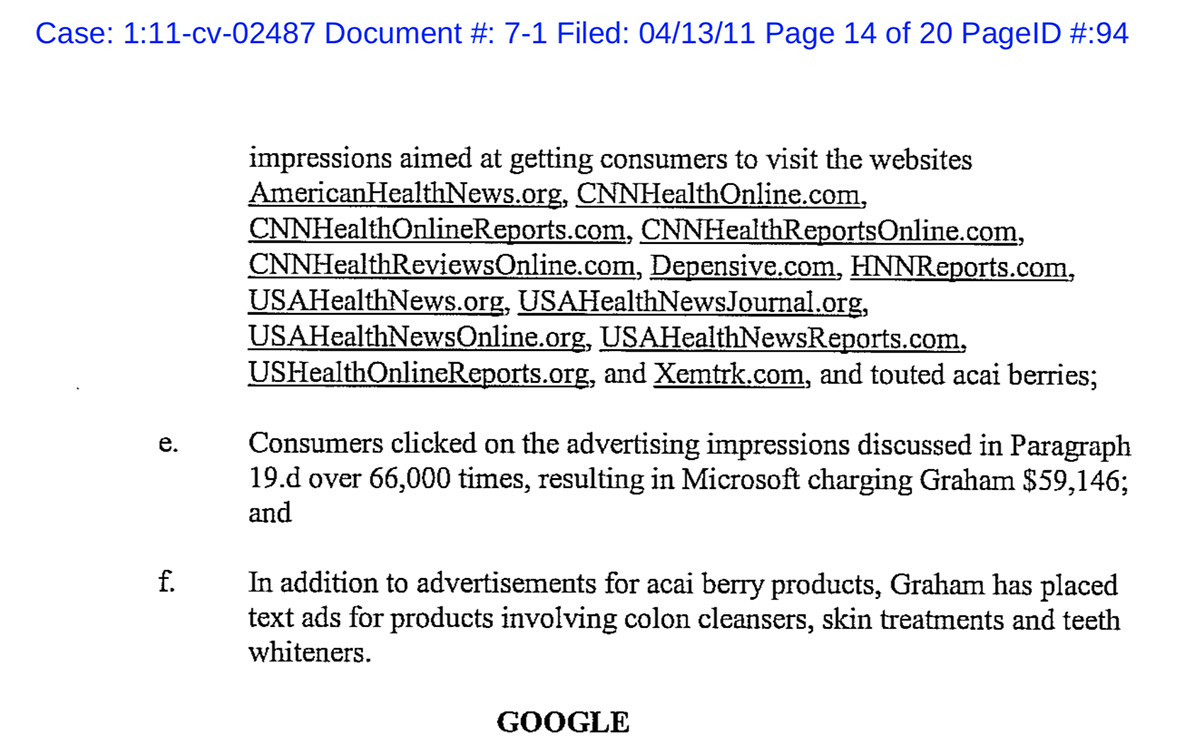

In 2011, the Federal Trade Commission (FTC) went after 10 companies purporting to sell "acai-berry weight-loss supplements" that could help consumers lose weight rapidly. The FDA does not regulate supplement marketing, so the case fell to the FTC, which built its sting operation around the "fake news websites" these companies created to mimic real news outlets. These fake news websites contained affiliate links that redirected visitors to the supplement sellers' websites. Dressing the ads up as news deceived consumers into thinking that mainstream news outlets had tested and endorsed the supplements.

The research aimed to measure how effective the sting operation was at protecting consumers from fraudulent marketing companies. To do so, we used Nielsen data containing monthly browsing histories for hundreds of devices that accessed these fake news websites.

The user browsing-history files are large (~2 GB each) and difficult to work with, so I wrote several Python scripts to parse them and, in the process, became proficient with Python's pandas library.
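One common way to handle files this size is to stream them in chunks instead of loading everything at once. The sketch below is not the code from the repo; it assumes the export is a CSV with a `domain` column, and the column names (`machine_id`, `domain`, `date`), the `.example` watchlist domains, and the `filter_browsing_file` helper are all hypothetical stand-ins, since the Nielsen schema isn't shown here. pandas' `read_csv` with `chunksize` yields one DataFrame per chunk, so each chunk can be cut down to watchlisted domains before it is kept.

```python
import io

import pandas as pd

# Hypothetical watchlist; the real domains came from the FTC complaints.
FAKE_NEWS_DOMAINS = {"fake-news-a.example", "fake-news-b.example"}


def filter_browsing_file(source, domains, domain_col="domain", chunksize=500_000):
    """Stream a large browsing-history CSV in chunks and keep only rows whose
    domain is on the watchlist. `domain_col` is an assumed column name."""
    kept = []
    # With chunksize, read_csv returns a reader that yields DataFrames of at
    # most `chunksize` rows, so only one chunk (plus the matches) is in memory.
    with pd.read_csv(source, chunksize=chunksize) as reader:
        for chunk in reader:
            # Case-insensitive exact match against the watchlist.
            mask = chunk[domain_col].astype(str).str.lower().isin(domains)
            kept.append(chunk.loc[mask])
    if not kept:
        return pd.DataFrame()
    return pd.concat(kept, ignore_index=True)


# Tiny in-memory stand-in for a multi-gigabyte file.
sample = io.StringIO(
    "machine_id,domain,date\n"
    "1,cnn.com,2011-01-03\n"
    "2,Fake-News-A.example,2011-01-04\n"
    "3,fake-news-b.example,2011-01-05\n"
)
hits = filter_browsing_file(sample, FAKE_NEWS_DOMAINS, chunksize=2)
print(hits["machine_id"].tolist())  # [2, 3]
```

Passing `usecols=` to `read_csv` so that only the needed columns are loaded would reduce memory use further.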

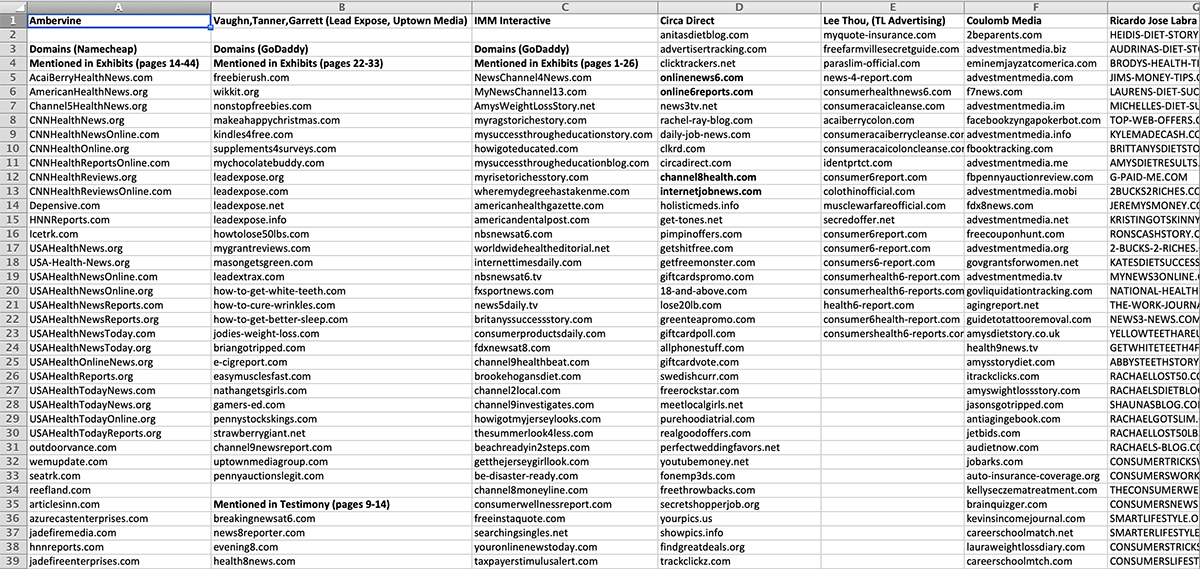

I also used Bloomberg Law to research each of the 10 lawsuits in depth, to identify which fake news domains I needed to look for in the browsing data. At the time I was considering law school and had taken the LSAT, so I found reading court briefs rather fun.

Here’s the full list of everything I found!
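Matching a list of domains like this against raw browsing records takes some care. A plain substring test would wrongly flag `notfake-news-a.example`, and an exact-match test would miss subdomains such as `health.fake-news-a.example`. Below is a minimal standard-library sketch of label-wise suffix matching. The watchlist and the `normalize_host`/`on_watchlist` helpers are hypothetical illustrations, not the project's actual code.

```python
from urllib.parse import urlsplit

# Hypothetical watchlist standing in for the domains named in the lawsuits.
WATCHLIST = {"fake-news-a.example", "fake-news-b.example"}


def normalize_host(url_or_host: str) -> str:
    """Lowercase hostname with scheme, path, port, and a leading 'www.' removed."""
    text = url_or_host.strip().lower()
    if "://" not in text and not text.startswith("//"):
        text = "//" + text  # make urlsplit treat a bare host as the netloc
    host = urlsplit(text).hostname or ""
    return host[4:] if host.startswith("www.") else host


def on_watchlist(url_or_host: str, watchlist=WATCHLIST) -> bool:
    """True if the host, or any parent domain of it, is on the watchlist."""
    labels = normalize_host(url_or_host).split(".")
    # Check "a.b.c", then "b.c", then "c": label-wise suffixes, not substrings.
    return any(".".join(labels[i:]) in watchlist for i in range(len(labels)))


print(on_watchlist("http://www.Fake-News-A.example:8080/diet"))  # True
print(on_watchlist("health.fake-news-b.example"))  # True
print(on_watchlist("notfake-news-a.example"))  # False
```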

I didn't commit the raw .csv file to the GitHub repo because of its sheer size (2 GB) and because the data is proprietary to Nielsen, but if you're interested, you can see the parsed data for January 2011 here.
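Once a month of data is parsed, it can be summarized per domain. The sketch below is hypothetical and does not describe the repo's actual output format. It assumes the filtered rows carry `machine_id`, `domain`, and `date` columns, and it uses pandas named aggregation to count visits and distinct devices per domain per calendar month.

```python
import pandas as pd


def monthly_domain_summary(hits: pd.DataFrame) -> pd.DataFrame:
    """Visits and distinct devices per domain per calendar month.
    Assumes hypothetical 'machine_id', 'domain', and 'date' columns."""
    df = hits.copy()
    # Collapse each timestamp to its calendar month, e.g. Period('2011-01').
    df["month"] = pd.to_datetime(df["date"]).dt.to_period("M")
    return (
        df.groupby(["month", "domain"])
        .agg(visits=("machine_id", "count"), devices=("machine_id", "nunique"))
        .reset_index()
    )


parsed = pd.DataFrame(
    {
        "machine_id": [1, 1, 2, 3],
        "domain": ["fake-news-a.example"] * 3 + ["fake-news-b.example"],
        "date": ["2011-01-03", "2011-01-20", "2011-01-05", "2011-01-09"],
    }
)
summary = monthly_domain_summary(parsed)
print(summary)
```

Reporting distinct devices alongside raw visits keeps a few heavy repeat visitors from looking like broad reach, which seems useful when comparing months before and after an enforcement action.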

Here's the link to that GitHub repo again if you're curious to see some of the code. My biggest takeaways from this project were learning how to work with large datasets, reading court briefs quickly for the important information (think Mike Ross from USA Network's Suits), and building infrastructure to parse those datasets and turn them into meaningful analysis.